Modeling spatiotemporal dynamics with neural differential equations has become a major line of research that opens new ways to handle various real-world scenarios (e.g., missing observations, irregular times, etc.). Despite such progress, most existing methods still face challenges in providing a general framework for analyzing time series. To tackle this, we adopt stochastic differential games to suggest a new philosophy of utilizing interacting collective intelligence in time series analysis. For the implementation, we develop a novel gradient descent-based algorithm called deep neural fictitious play to approximate the Nash equilibrium. We theoretically analyze the convergence of the proposed algorithm and discuss the advantage of cooperative games in handling noninformative observations. Through experiments on various datasets, we demonstrate the superiority of our framework over all the tested benchmarks in time-series prediction by capitalizing on the advantages of applying cooperative games. An ablation study shows that the neural agents of the proposed framework learn intrinsic temporal relevance to make accurate time-series predictions.

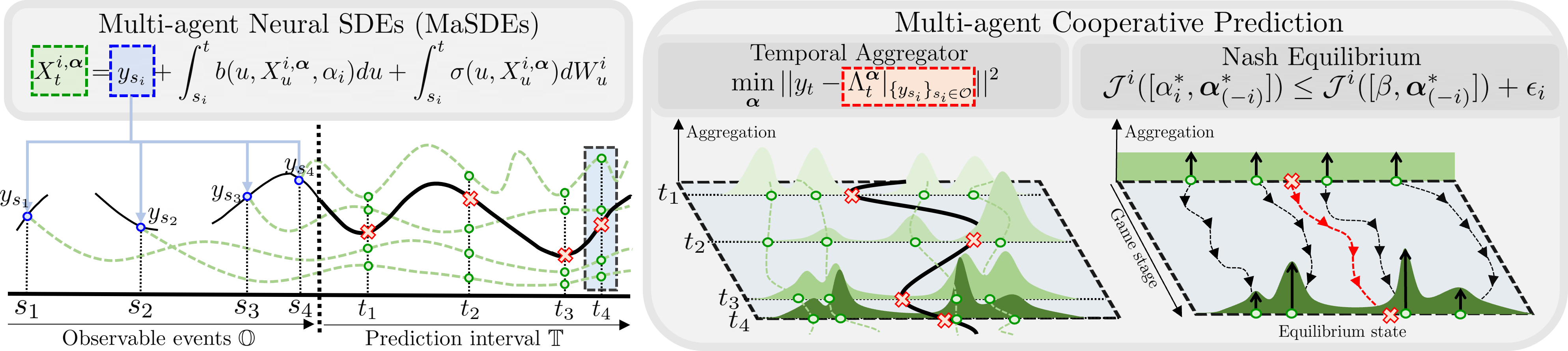

Each agent individually encodes the impact of a partial observation into an underlying stochastic trajectory and interacts with the other agents to extract meaningful information for predicting the future. We formulate the collaboration among agents from the view of a cooperative differential game to integrate the individual information and adaptively balance the importance of each agent. As a result of the differential game, cooperative agents achieve the Nash equilibrium and agree to suppress non-informative observations and highlight the contribution of important observations for accurate prediction.

We present a novel framework built upon game theory to model the temporal dynamics of time-series data. More specifically, we extend the conventional differential equation (DE) to its multi-agent counterpart for decomposing the observational time series. To the best of our knowledge, this work is the first attempt to adopt a philosophy of game theory in dealing with multivariate time series. For tractability of applying differential games, we propose a novel gradient descent-based algorithm called deep neural fictitious play, which operates in a tractable and parallel way to obtain the Nash equilibrium. Theoretical results based on the Feynman-Kac formalism guarantee the convergence of the proposed algorithm and explicate the temporal relevance of past and future. Our approach includes the following crucial components:

Multi-conditioned Score-based Predictor: Since each neural agent can only represent the individual impact of partial information, we aggregate the individual decisions made by each neural agent to capitalize on the temporal dynamics available from the entire set of past observations. Inspired by recent work on score-based generative models (SGMs), we introduce the multi-conditioned score-based predictor, which produces the collaborative prediction between neural agents.

Stochastic Differential Game: To achieve efficient coordination among interacting agents and optimize temporal aggregation, it is essential to have a unified structure that can effectively extract useful information from time-series data. To meet these needs, we propose a novel framework that formulates the time-series prediction problem as a cooperative differential game, where each neural agent balances its individual costs to achieve a Nash equilibrium.
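As a rough illustration of what a multi-agent stochastic system looks like numerically, the sketch below simulates a handful of interacting agents with the Euler-Maruyama scheme. The drift (each agent is pulled toward the population mean), the parameter names `theta` and `sigma`, and the function `simulate_agents` are assumptions made for this example only; they are not the neural drift, control, or cost functions used in the framework.

```python
import math
import random


def simulate_agents(x0, theta=1.0, sigma=0.2, dt=0.01, steps=500, seed=0):
    """Euler-Maruyama simulation of interacting scalar agents.

    Hypothetical dynamics (an assumption for illustration):
        dX_i = theta * (mean(X) - X_i) dt + sigma dW_i
    """
    rng = random.Random(seed)
    states = list(x0)
    path = [list(states)]
    for _ in range(steps):
        mean_state = sum(states) / len(states)
        states = [
            x
            + theta * (mean_state - x) * dt              # interaction drift
            + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)  # Brownian increment
            for x in states
        ]
        path.append(list(states))
    return path


# Noise-free run: agents contract toward their common mean.
path = simulate_agents([0.0, 1.0, 5.0], sigma=0.0)
final_states = path[-1]
```

With `sigma=0.0` the population mean is preserved and the spread between agents shrinks, which makes the coupling term easy to inspect before noise is switched on.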

Deep Neural Fictitious Play: Our approach to approximating the Nash equilibrium involves a gradient descent-based fictitious play algorithm with forward-backward stochastic differential equations (FBSDEs). This allows us to use neural network models for the control agents, which offer sufficient expressivity for the task at hand. By approximating the solution to the non-convex Hamilton-Jacobi-Bellman equations (HJBEs) that support the neural network structure, we are able to find the Nash equilibrium. Furthermore, under the proposed training scheme, MaSDEs with a large capacity ensure small marginals to the Nash equilibrium.
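The general idea of gradient descent-based fictitious play can be sketched on a toy static game. In the example below, each agent holds a scalar action and minimizes a quadratic cost while the other agents' actions are frozen at their values from the previous stage; all agents update in parallel. The cost function, the names `own_gradient` and `fictitious_play`, and every hyperparameter are assumptions for illustration. The sketch contains no neural networks and no FBSDEs, so it should not be read as the algorithm proposed here.

```python
def own_gradient(i, actions, targets, lam):
    """Gradient of agent i's cost with respect to its own action.

    Hypothetical cost (an assumption for illustration):
        J_i = (a_i - c_i)^2 + lam * (a_i - mean of the other actions)^2
    """
    others = [a for j, a in enumerate(actions) if j != i]
    mean_others = sum(others) / len(others)
    return 2.0 * (actions[i] - targets[i]) + 2.0 * lam * (actions[i] - mean_others)


def fictitious_play(targets, lam=1.0, stages=60, inner_steps=60, lr=0.1):
    """Each stage: every agent runs gradient descent on its own cost
    against the frozen actions of the others from the previous stage."""
    actions = [0.0] * len(targets)
    for _ in range(stages):
        frozen = list(actions)          # strategies from the previous stage
        new_actions = []
        for i in range(len(targets)):   # independent per agent, so parallelizable
            trial = list(frozen)
            for _ in range(inner_steps):
                trial[i] -= lr * own_gradient(i, trial, targets, lam)
            new_actions.append(trial[i])
        actions = new_actions
    return actions


targets = [1.0, 2.0, 6.0]
equilibrium = fictitious_play(targets)
```

At the returned point, every agent's own-cost gradient is approximately zero, which is the first-order condition for a Nash equilibrium in this convex toy game.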

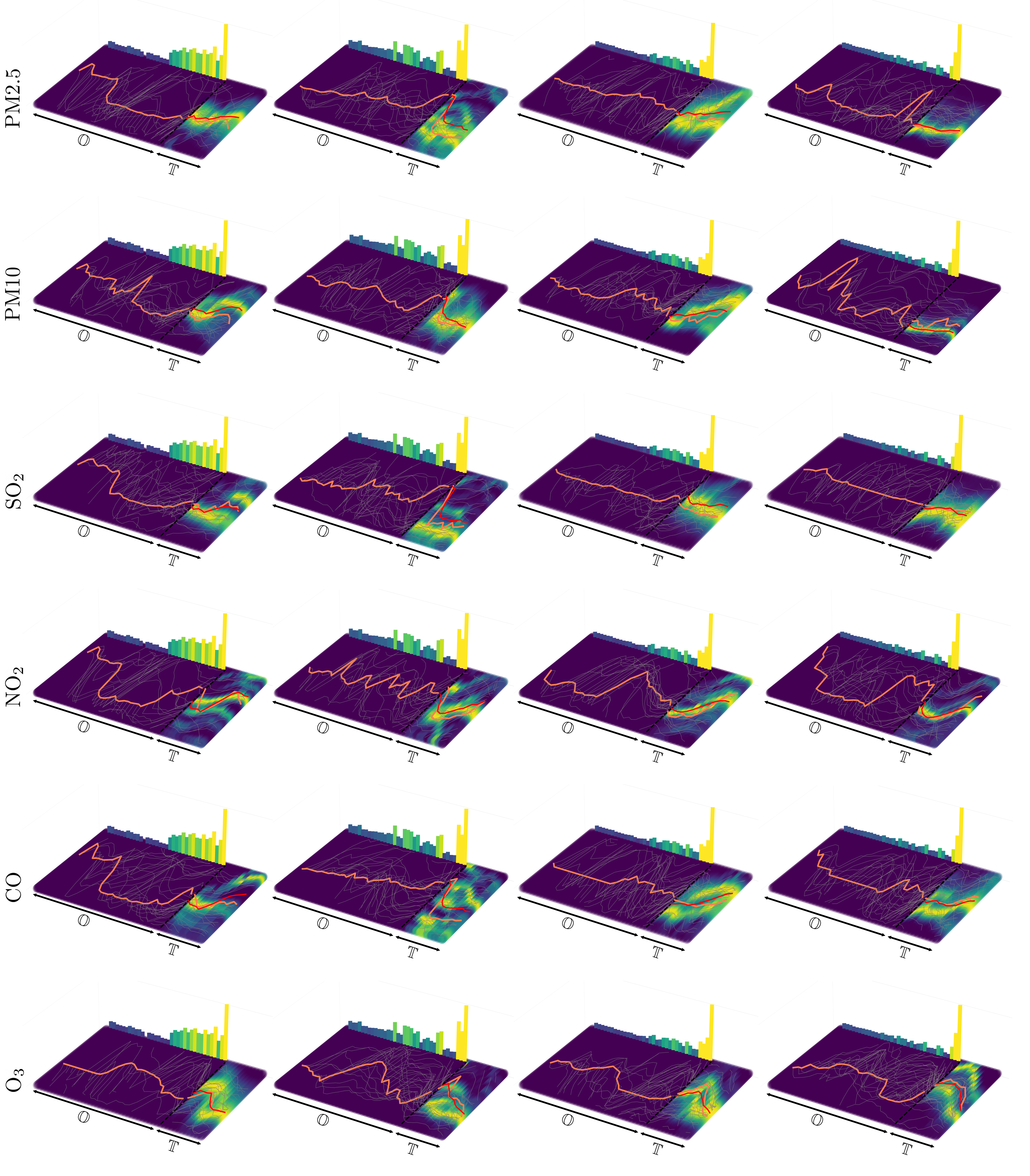

Predictive Performance on Time-Series Features of the BAQD Dataset, including PM$_{2.5}$, PM$_{10}$, SO$_{2}$, NO$_{2}$, CO, and O$_{3}$.

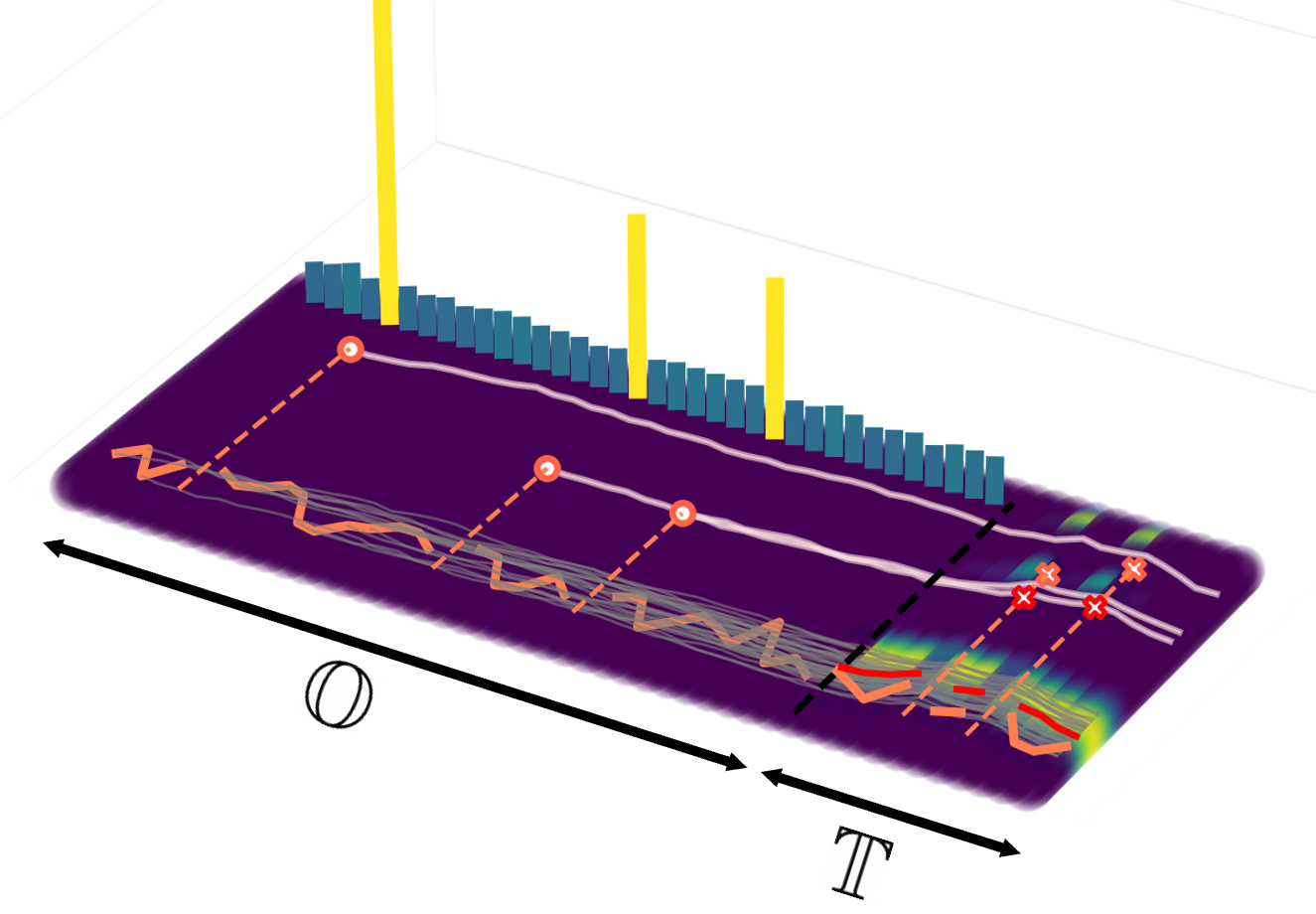

Modeling physical phenomena with point processes is common in various domains, such as finance and geology, and Gaussian-impulse noise can be used to mimic noisy events that occur at random times. In this experiment, data were generated using a homogeneous Poisson process, and the neural agents were tasked with accurately restoring the random peaks. The proposed neural agents were found to learn different temporal dynamics from the data and to filter out redundant observations, which helped them focus on informative signals for accurate predictions.

Gaussian impulse signal from a homogeneous Poisson process.
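A signal of this kind can be generated along the following lines. This is a minimal sketch, assuming that each event of a homogeneous Poisson process contributes a Gaussian-shaped bump to the signal; the rate, amplitude, bump width, grid spacing, and function names are hypothetical choices and not the settings used in the experiment.

```python
import math
import random


def poisson_event_times(rate, horizon, rng):
    """Event times of a homogeneous Poisson process on [0, horizon),
    built from i.i.d. exponential inter-arrival times."""
    times = []
    t = rng.expovariate(rate)
    while t < horizon:
        times.append(t)
        t += rng.expovariate(rate)
    return times


def gaussian_impulse_signal(event_times, grid, amplitude=1.0, width=0.05):
    """Sum of Gaussian-shaped impulses centred at the event times
    (the impulse shape is an assumption for illustration)."""
    return [
        sum(amplitude * math.exp(-0.5 * ((t - s) / width) ** 2) for s in event_times)
        for t in grid
    ]


rng = random.Random(0)
horizon = 10.0
events = poisson_event_times(rate=1.5, horizon=horizon, rng=rng)
grid = [k * 0.01 for k in range(1001)]
signal = gaussian_impulse_signal(events, grid)
```

Sampling exponential inter-arrival times is a standard way to simulate a homogeneous Poisson process, and it keeps the peak locations irregular, which is the property the restoration task relies on.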
